Welcome

at RWTH Aachen University!

The Virtual Reality and Immersive Visualization Group started in 1998 as a service team in the RWTH IT Center. Since 2015, we have been a research group (Lehr- und Forschungsgebiet) at i12 within the Computer Science Department. Moreover, the Group is a member of the Visual Computing Institute and continues to be an integral part of the RWTH IT Center.

In a unique combination of research, teaching, services, and infrastructure, we provide Virtual Reality technologies and the underlying methodology as a powerful tool for scientific-technological applications.

In terms of basic research, we develop advanced methods and algorithms for multimodal 3D user interfaces and explorative analyses in virtual environments. Furthermore, we focus on application-driven, interdisciplinary research in collaboration with RWTH Aachen institutes, Forschungszentrum Jülich, research institutions worldwide, and partners from business and industry, covering fields like simulation science, production technology, neuroscience, and medicine.

To this end, we are members of / associated with the following institutes and facilities:

Our offices are located in the RWTH IT Center, where we operate one of the largest Virtual Reality labs worldwide. The aixCAVE, a 30 sqm visualization chamber that makes it possible to interactively explore virtual worlds, is open for use by any RWTH Aachen research group.

News

• Martin Bellgardt receives doctoral degree from RWTH Aachen University

Today, our former colleague Martin Bellgardt successfully passed his Ph.D. defense and received a doctoral degree from RWTH Aachen University for his thesis on "Increasing Immersion in Machine Learning Pipelines for Mechanical Engineering". Congratulations!

April 30, 2025

• Student researcher opening in the area of Social VR.

Jan. 15, 2025

• Active Participation at 2024 IEEE VIS Conference (VIS 2024)

At this year's IEEE VIS Conference, several contributions of our visualization group were presented. Dr. Tim Gerrits chaired the 2024 SciVis Contest and presented two accepted papers: the short paper "DaVE - A Curated Database of Visualization Examples" by Jens Koenen, Marvin Petersen, Christoph Garth and Dr. Tim Gerrits, as well as the contribution to the Workshop on Uncertainty, "Exploring Uncertainty Visualization for Degenerate Tensors in 3D Symmetric Second-Order Tensor Field Ensembles" by Tadea Schmitz and Dr. Tim Gerrits, which received the best paper award. Congratulations!

Oct. 22, 2024

• Honorable Mention

One Best Paper Honorable Mention Award of VRST 2024 was given to Sevinc Eroglu for her paper entitled “Choose Your Reference Frame Right: An Immersive Authoring Technique for Creating Reactive Behavior”.

Oct. 11, 2024

• Tim Gerrits as invited Keynote Speaker at the ParaView User Days in Lyon

ParaView, developed by Kitware, is one of the most-used open-source visualization and analysis tools in research and industry. For the second edition of the ParaView User Days, Dr. Tim Gerrits was invited to share his insights on developing and providing visualization within the academic communities.

Sept. 26, 2024

• Invited Talk at Visual Computing for Biology and Medicine

This year's Eurographics Symposium on Visual Computing for Biology and Medicine (VCBM) in Magdeburg included a VCBM Fachgruppen Meeting with an invited presentation by Dr. Tim Gerrits on "Harnessing High Performance Infrastructure for Scientific Visualization of Medical Data".

Sept. 20, 2024

Recent Publications

Poster: Listening Effort In Populated Audiovisual Scenes Under Plausible Room Acoustic Conditions

To be presented at: International Symposium on Auditory and Audiological Research (ISAAR) 2025

Listening effort in real-world environments is shaped by a complex interplay of factors, including time-varying background noise, visual and acoustic cues from both interlocutors and distractors, and the acoustic properties of the surrounding space. However, many studies investigating listening effort neglect both auditory and visual fidelity: static background noise is frequently used to avoid variability, talker visualization often disregards acoustic complexity, and experiments are commonly conducted in free-field environments without spatialized sound or realistic room acoustics. These limitations risk undermining the ecological validity of study outcomes. To address this, we developed an audiovisual virtual reality (VR) framework capable of rendering immersive, realistic scenes that integrate dynamic auditory and visual cues. Background noise included time-varying speech and non-speech sounds (e.g., conversations, appliances, traffic), spatialized in controlled acoustic environments. Participants were immersed in a visually rich VR setting populated with animated virtual agents. Listening effort was assessed using a heard-text-recall paradigm embedded in a dual-task design: participants listened to and remembered short stories told by two embodied conversational agents while simultaneously performing a vibrotactile secondary task. We compared three room acoustic conditions: a free-field environment, a room optimized for reverberation time, and an untreated reverberant room. Preliminary results from 30 participants (15 female; age range: 18–33; M = 25.1, SD = 3.05) indicated that room acoustics significantly affected both listening effort and short-term memory performance, with notable differences between free-field and reverberant conditions. These findings underscore the importance of realistic acoustic environments when investigating listening behavior in immersive audiovisual settings.

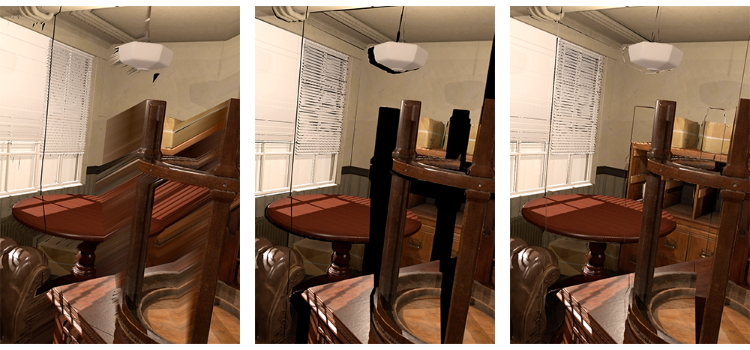

Interactive Streaming of 3D Scenes to Mobile Devices using Dual-Layer Image Warping and Loop-based Depth Reconstruction

2025 International Conferences in Central Europe on Computer Graphics, Visualization and Computer Vision (WSCG 2025)

While mobile devices have developed into hardware with advanced capabilities for rendering 3D graphics, they commonly lack the computational power to render large 3D scenes with complex lighting interactively. A prominent approach to tackle this is rendering required views on a remote server and streaming them to the mobile client. However, the rate at which servers can supply data is limited, e.g., by the available network speed, requiring image-based rendering techniques like image warping to compensate for the latency and allow a smooth user experience, especially in scenes where rapid user movement is essential. In this paper, we present a novel streaming approach designed to minimize artifacts during the warping process by including an additional visibility layer that keeps track of occluded surfaces while allowing access to 360° views. In addition, we propose a novel mesh generation technique based on the detection of loops to reliably create a mesh that encodes the depth information required for the image warping process. We demonstrate our approach in a number of complex scenes and compare it against existing works using two layers and one layer alone. The results indicate a significant reduction in computation time while achieving comparable or even better visual results when using our dual-layer approach.

Towards Comprehensible and Expressive Teleportation Techniques in Immersive Virtual Environments

2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)

Teleportation, a popular navigation technique in virtual environments, is favored for its efficiency and reduction of cybersickness but presents challenges such as reduced spatial awareness and limited navigational freedom compared to continuous techniques. I would like to focus on three aspects that advance our understanding of teleportation in both the spatial and the temporal domain: 1) assessing different parametrizations of common mathematical models used to specify the target location of the teleportation and their influence on teleportation distance and accuracy; 2) extending teleportation capabilities to improve navigational freedom, comprehensibility, and accuracy; and 3) adapting teleportation to the time domain, mediating temporal disorientation. The results will enhance the expressivity of existing teleportation interfaces and provide validated alternatives to their steering-based counterparts.
